Every use case or product needs to store, fetch or process data, but not all data has the same needs. When you think about data, you need to break it down: how much data is there, and how quickly do you need it?

The common thought about data is that you open an app and expect some data to be fetched from a server and rendered, e.g. you open a weather app to see the temperature outside. How accurate does this need to be, and how fast? For example, do you expect to see it within seconds or minutes of opening the app? Hopefully somewhere in between, but most temperatures do not change dramatically within a few minutes (e.g. from 80 degrees to 50 degrees). So if you are off by 1 degree, it's not that big of a deal. This gets into what it means to be "real time" or "fast". The better question to ask is what tolerance is acceptable. Do you mean within 1ms, 100ms, 1 second, 30 seconds, 1 minute or 5 minutes? What is your “real-time” assumption? Within that time constraint, how much data are you expecting to calculate or view at a given moment? Are you just looking for the temperature of Pittsburgh, PA, or are you trying to pull the temperature of every major city in the United States?
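To make the idea of an acceptable tolerance concrete, here is a minimal sketch of a cache that only goes back to the server once its stored value is older than the tolerance you picked. The `ToleranceCache` class, the `fetch` callback and the injectable `clock` are all hypothetical names made up for this illustration, not part of any particular library.

```python
import time
from typing import Callable, Dict, Tuple


class ToleranceCache:
    """Serve a cached value while it is younger than the freshness tolerance."""

    def __init__(
        self,
        fetch: Callable[[str], float],
        tolerance_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch                  # hypothetical slow call, e.g. a weather API
        self._tolerance = tolerance_seconds  # your "real-time" assumption
        self._clock = clock                  # injectable so the cache can be tested
        self._entries: Dict[str, Tuple[float, float]] = {}  # key -> (stored_at, value)

    def get(self, key: str) -> float:
        now = self._clock()
        entry = self._entries.get(key)
        # Fresh enough: answer from memory and skip the slow fetch.
        if entry is not None and now - entry[0] < self._tolerance:
            return entry[1]
        # Missing or too old: fetch again and remember when we did.
        value = self._fetch(key)
        self._entries[key] = (now, value)
        return value
```

With a tolerance of 300 seconds, asking for the temperature of Pittsburgh, PA ten times in five minutes would hit the server once. Shrinking the tolerance toward 1 second means more fetches for the same number of requests, which is where the cost of "real time" starts to show.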

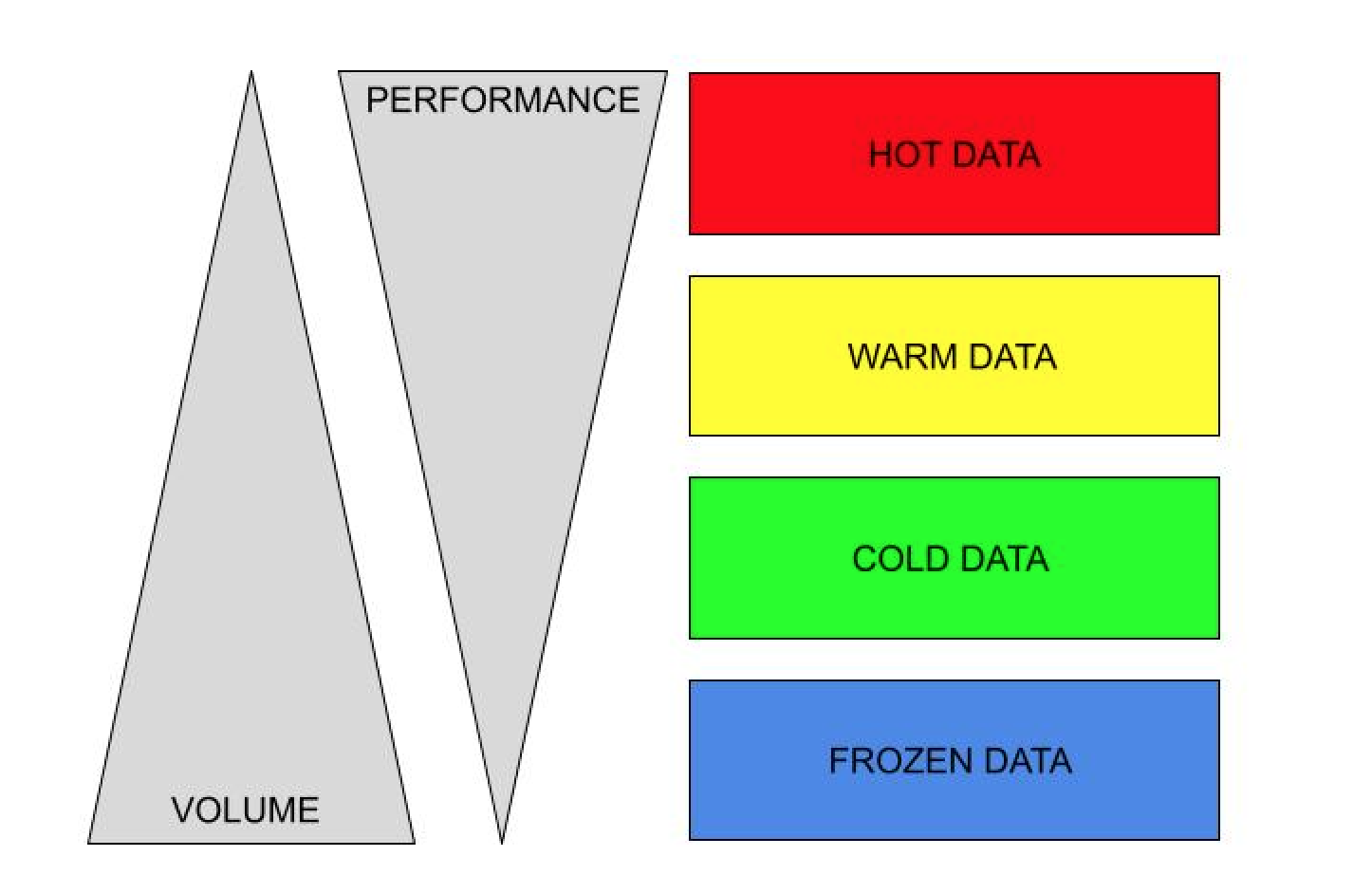

Data can be broken down in a variety of ways, but the breakdown below should help illustrate how to think about it: the temperature of the data, the volume of the data and the performance of that data.

The temperature is basically the response time you get for a given volume and performance. The volume is how large the data is (storage size), in GB (gigabytes), TB (terabytes) or even PB (petabytes). The performance is the tolerance of the response time.

Hot data should be extremely performant and possibly leverage a CDN that can provide a response time of around 1-100ms. The data should be stored in RAM (e.g. Redis), so maybe under a few GB of data, or TBs for large server instances. Think of this as hourly or daily data.

Warm data might have a response time of up to a second and could be thought of as a database (e.g. Postgres), where you can query large datasets and respond to a web app or mobile app. Think of this as monthly or yearly data.

Cold data might be more like BigQuery, where you are storing larger amounts of data and can allow for slower performance, such as generating reports or looking up TBs or even PBs of data.

Frozen data might be more like AWS S3 Glacier, where you archive data (e.g. data older than 5 years) and only pull it for some historic need or record lookup. This might be more of a specific request that a developer or DBA handles on behalf of a customer.
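The four tiers above can be sketched as a small lookup from the age of a record to its temperature. The cutoffs here are assumptions taken loosely from the examples in this post (daily, yearly, older than 5 years), and the names `Tier` and `tier_for_age` are made up for illustration. Your own cutoffs will depend on your product.

```python
from datetime import timedelta
from enum import Enum


class Tier(Enum):
    HOT = "hot"        # RAM / CDN, roughly 1-100ms
    WARM = "warm"      # database, up to about a second
    COLD = "cold"      # warehouse, slower reports
    FROZEN = "frozen"  # archive, pulled on request


# Hypothetical cutoffs, chosen only to mirror the examples in the text.
HOT_MAX_AGE = timedelta(days=1)
WARM_MAX_AGE = timedelta(days=365)
COLD_MAX_AGE = timedelta(days=5 * 365)


def tier_for_age(age: timedelta) -> Tier:
    """Pick a storage tier from how old a record is."""
    if age < timedelta(0):
        raise ValueError("age cannot be negative")
    if age <= HOT_MAX_AGE:
        return Tier.HOT
    if age <= WARM_MAX_AGE:
        return Tier.WARM
    if age <= COLD_MAX_AGE:
        return Tier.COLD
    return Tier.FROZEN
```

Age is only one possible signal. You could just as well classify by how often a record is read, but age is the easiest one to reason about when you are starting out.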

Although the temperatures and assumptions vary, the bottom line is that the warmer you store your data, the higher the cost, and the colder you store it, the lower the cost.

So why should I care? Cost for one, but more importantly to understand scale and how a web or mobile app gets built. If you build a proof of concept and only use a database like Postgres, then you are limiting your performance, but also your cost.

I also want to call out a few ways of “cheating” with data temperature. For example, CDNs are a great way of optimizing and speeding up websites because they serve content from the edge. So rather than 1 server sitting in New York City, you now have hundreds of servers sitting all over the world, which act as “clones” of the origin server. By limiting the distance a request needs to travel from the client to the server, faster responses are possible.

Cloudflare, for example, offers Workers KV, a low-latency key-value store. Since this all happens on the edge, you can store values of up to 25MB (per key) at 200 locations. Although this might not sound like a lot of data, think of each value as a “row” of data. So you could create a “serverless” CRUD app that runs completely on the edge. This is a slightly different way of thinking, made possible mostly by advances in technology, and it allows you to create a product that is very fast, super cheap and needs little or no devops. You might also want to check out Durable Objects, which was still in closed beta at the time of writing.

Another opportunity for “cheating” with data temperature is to limit the amount of hot/warm data over time by automatically “offloading” your data to a colder temperature. This is normally done by a cron task. Thinking in terms of time frames, such as hourly, daily, weekly, monthly or yearly, you have a wide range of data, both from user interactions and for reporting. As time goes on you have more and more data, which can cost a lot to keep warm, or even cold, depending on size. Offloading can easily be done by moving database data to a longer-term data store (e.g. Postgres to S3 or Postgres to BigQuery). By leveraging analytics you can also see what users are doing with your system, and decide where to create a fast experience for them and where longer delays are acceptable, such as larger requests or historic data.
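As a rough sketch of what such an offloading task could look like, the function below moves rows older than a cutoff out of a table and into an append-only archive file. To keep it self-contained it uses SQLite as a stand-in for Postgres and a local JSON Lines file as a stand-in for S3 or BigQuery. The `readings` table, its columns and the `offload_old_rows` name are all assumptions made for this example.

```python
import json
import sqlite3
from pathlib import Path


def offload_old_rows(conn: sqlite3.Connection, archive_path: Path, cutoff: str) -> int:
    """Move rows recorded before `cutoff` from the warm table to a cold archive file.

    Assumes `recorded_at` holds ISO 8601 strings, which sort in date order.
    Returns the number of rows moved.
    """
    rows = conn.execute(
        "SELECT id, city, temperature, recorded_at FROM readings "
        "WHERE recorded_at < ? ORDER BY id",
        (cutoff,),
    ).fetchall()
    if not rows:
        return 0

    # Write the cold copy first, so a failure here leaves the warm data untouched.
    with archive_path.open("a", encoding="utf-8") as archive:
        for row_id, city, temperature, recorded_at in rows:
            record = {
                "id": row_id,
                "city": city,
                "temperature": temperature,
                "recorded_at": recorded_at,
            }
            archive.write(json.dumps(record) + "\n")

    # Only then delete from the warm store, in a single transaction.
    with conn:
        conn.executemany(
            "DELETE FROM readings WHERE id = ?",
            [(row[0],) for row in rows],
        )
    return len(rows)
```

A cron task would call this once a night or once a week with a cutoff such as "one year ago". The order matters: archive first, delete second, so the worst case after a crash is a duplicate in cold storage rather than lost data.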

Lastly, I want to call out that not only does data have a temperature, but servers and platforms have a form of temperature too, based on the infrastructure (CPU, memory, storage) or technology stack (serverless). You could build an AWS Lambda or GCP function that is only charged on usage, but it can take a few seconds to “boot”, so you get “cold starts” vs “warm starts”. Similarly for cost, you could look at things like AWS instances, from reserved instances to on-demand and even spot instances. If you want to optimize for cost, you can build your infrastructure on spot instances, but know that servers can go up and down at any moment, so you need to keep your data separate from those servers and design for failure first. You could also skip steps and store all data as cold from the start, for example if you are scraping data that will not be processed or reviewed until a later date. This can also help with things like “replaying” data (e.g. crawl data), so you can store a “test” file that allows you to test your platform without actually hitting any external service.
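The “replaying” idea can be sketched in a few lines: record every response your crawler receives into a file, then later build a fetcher that answers only from that file. The function names and the JSON Lines recording format below are assumptions for illustration, and `fetch` stands for whatever function your crawler already uses to download a page.

```python
import json
from pathlib import Path
from typing import Callable, Dict


def make_recording_fetcher(
    fetch: Callable[[str], str], recording_path: Path
) -> Callable[[str], str]:
    """Wrap `fetch` so every response is also appended to a recording file."""

    def recording_fetch(url: str) -> str:
        body = fetch(url)  # the real (external) call
        with recording_path.open("a", encoding="utf-8") as recording:
            recording.write(json.dumps({"url": url, "body": body}) + "\n")
        return body

    return recording_fetch


def make_replay_fetcher(recording_path: Path) -> Callable[[str], str]:
    """Build a fetcher that answers from the recording and never calls out."""
    recorded: Dict[str, str] = {}
    with recording_path.open(encoding="utf-8") as recording:
        for line in recording:
            entry = json.loads(line)
            recorded[entry["url"]] = entry["body"]  # last recording of a URL wins

    def replay_fetch(url: str) -> str:
        if url not in recorded:
            raise KeyError(f"no recorded response for {url}")
        return recorded[url]

    return replay_fetch
```

In production you would pass the recording fetcher to your crawler, and in tests you would pass the replay fetcher instead. The rest of the platform does not need to know which one it got.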